Managing AWS IAM in GitOps Style

Are you new to AWS and looking for a project to get your hands dirty? This beginner-friendly project focuses on managing AWS IAM using GitOps.

Problem

In the modern era of cloud computing, managing infrastructure has evolved beyond manual configurations to embrace GitOps, a paradigm rooted in Infrastructure as Code (IaC). In this comprehensive guide, we'll delve into managing AWS Identity and Access Management (IAM) using a GitOps approach, facilitated by tools like Terraform and Docker, as well as key AWS services. If you're new to AWS, this guide serves as an ideal hands-on introduction, covering essential AWS services like Lambda, EventBridge, CloudTrail, CloudWatch, ECR, and S3.

Prerequisites

AWS account

AWS CLI

GitHub account

Terraform

Docker

Task

In this blog, we will build a GitOps-driven (IaC) approach to AWS IAM management. The goal is a two-way sync: if anyone changes IAM Roles, Policies, or SCPs (Service Control Policies) in AWS, the change is pushed to GitHub; if someone changes the configuration in GitHub, the change is applied to AWS. This post implements the first direction (AWS to GitHub); the second is outlined under Further enhancements.

For this demo, the code is available here - aws-iam-gitops

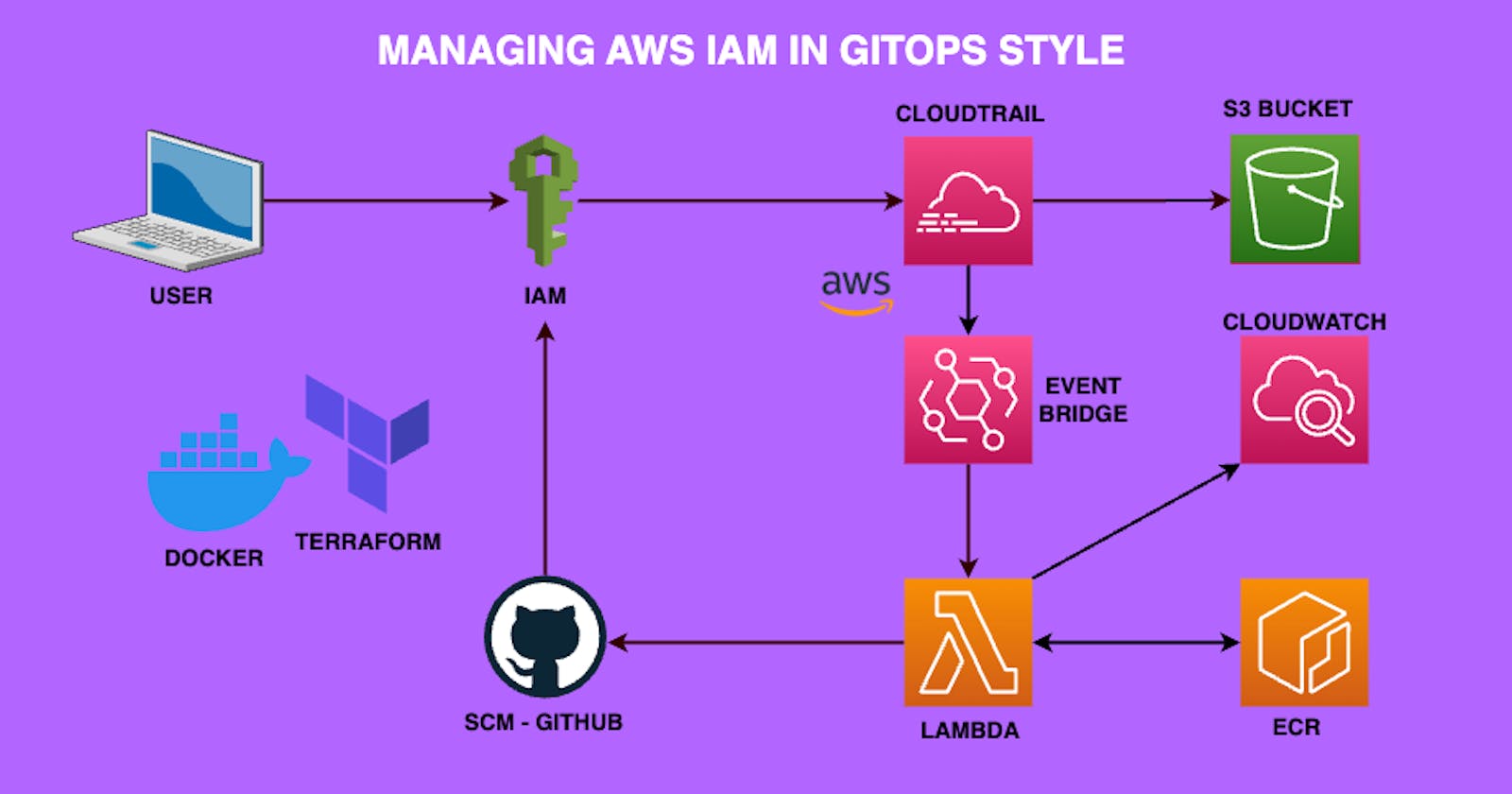

Architecture

First, we need to understand the components required to achieve our goal. This is the architectural overview of the system to be built.

Components

GitHub

This is where all the information on IAM Roles, Policies & SCPs will be stored. Make sure to create a Personal Access Token (PAT) with read & write access to the given repository.

IAM

This is where all the Identity and Access Management information is stored.

CloudTrail

This records the API calls and account activity that occur in an AWS account, including IAM changes.

S3 bucket

This is where the CloudTrail logs are stored.

EventBridge

This will make it easy to build event-driven applications. In this case, the events we are looking for are IAM changes. We can configure EventBridge to notify/react whenever an IAM change event occurs.

Lambda

This will run the given code/container. We don't need to own a server to execute code. In this case, it will be triggered by the EventBridge when an IAM change occurs.

CloudWatch

This is where the Lambda logs are stored.

ECR

Elastic Container Registry (ECR) will be used by Lambda to fetch the container image that it has to run.

Trust Boundaries

Lambda to ECR

Lambda has permission to pull images from ECR.

Lambda to IAM

Lambda has permission to list roles & policies.

Lambda to Organizations

Lambda has permissions to list SCPs.

Lambda to GitHub

Lambda uses GitHub tokens to interact with the GitHub API.

EventBridge to Lambda

EventBridge has permissions to trigger the Lambda function.

CloudTrail to S3

CloudTrail has permission to write logs to the S3 bucket.

CloudTrail to EventBridge

CloudTrail delivers the recorded API calls to EventBridge, which matches them against the rule and triggers the Lambda function.

Workflow

Initialization

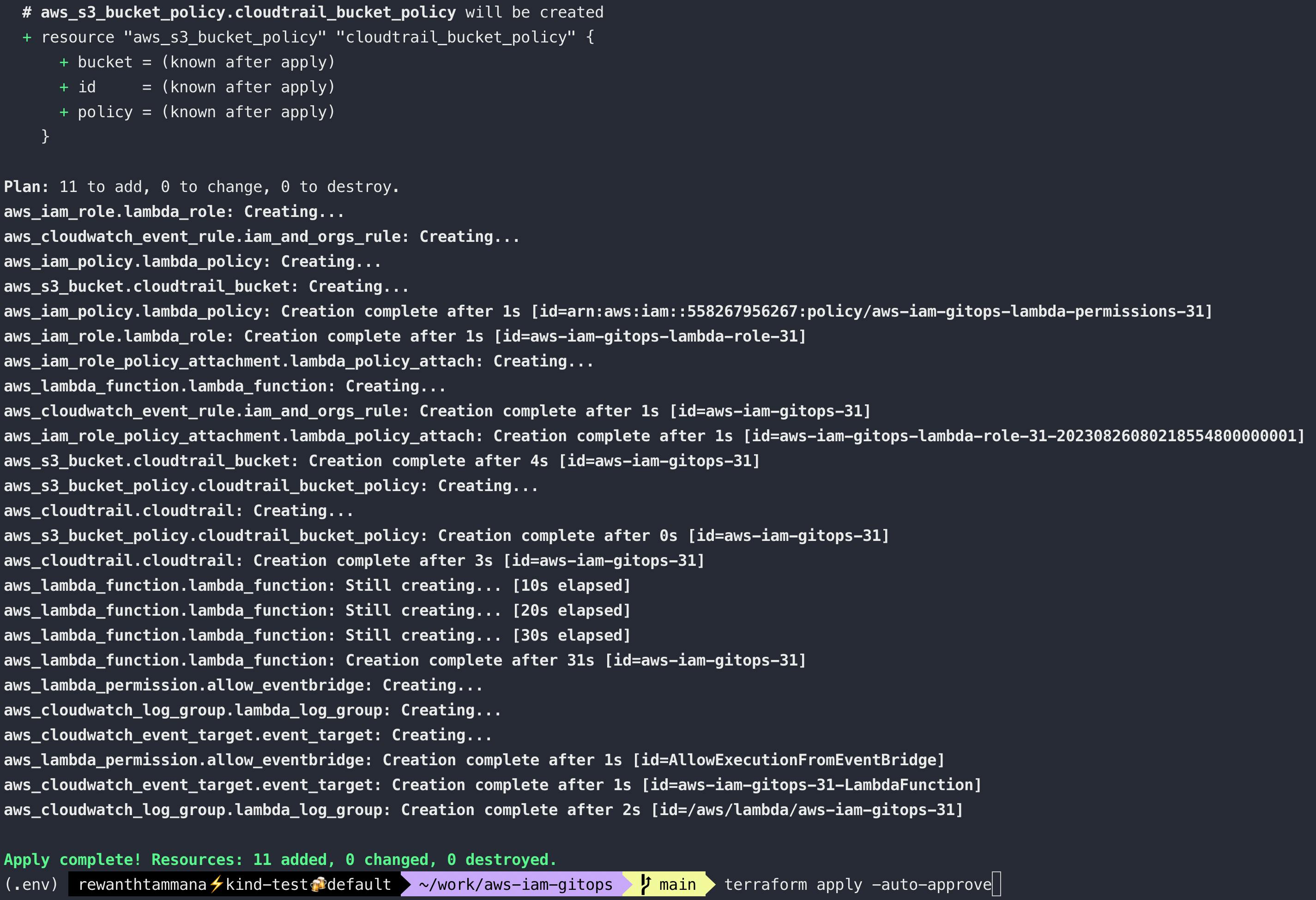

Terraform script sets up all AWS resources.

The lambda function clones the GitHub repo.

Event Trigger

Any change in AWS IAM or Organizations is logged by CloudTrail.

EventBridge picks up the change and triggers the Lambda function.
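To make the trigger concrete, here is a minimal Python sketch of the kind of event the Lambda function receives. API calls forwarded by CloudTrail arrive with the detail-type "AWS API Call via CloudTrail" and carry the call itself under the detail key. The describe_change helper, the sample event, and the account ID are illustrative assumptions, not part of the project code.

```python
from typing import Any, Dict


def describe_change(event: Dict[str, Any]) -> str:
    """Return a one-line summary of a CloudTrail-backed EventBridge event."""
    detail = event.get("detail", {})
    actor = detail.get("userIdentity", {}).get("arn", "unknown principal")
    source = event.get("source", "unknown")
    action = detail.get("eventName", "unknown")
    return f"{source}: {action} by {actor}"


# Trimmed-down sample event; real events contain many more fields.
sample_event = {
    "source": "aws.iam",
    "detail-type": "AWS API Call via CloudTrail",
    "detail": {
        "eventSource": "iam.amazonaws.com",
        "eventName": "CreateRole",
        "userIdentity": {"arn": "arn:aws:iam::123456789012:user/example"},
    },
}

print(describe_change(sample_event))
```

Logging a summary like this at the top of the handler makes it easier to correlate a GitHub commit with the API call that caused it.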

Lambda Execution

The lambda function lists all IAM roles, policies, and SCPs.

It writes this information as JSON files into the cloned GitHub repo.

Commit to GitHub

- The lambda function commits and pushes the changes to the GitHub repo.

Terraform Destroy

- Optionally, terraform destroy can be used to remove all AWS resources created by Terraform. Resources created outside of it (such as the ECR repository) are left in place.

Logging

- All Lambda function logs are stored in CloudWatch Log Groups.

Building systems - One click

# Change environment variables (MUST/MANDATORY)

# Make sure TF_VAR_GITHUB_REPO exists on your GitHub

export TF_VAR_GITHUB_USERNAME=rewanthtammana

export TF_VAR_GITHUB_REPO=testaws

export TF_VAR_GITHUB_TOKEN=

# Change environment variables (Optional)

export TF_VAR_ECR_REPO_NAME=aws-iam-gitops

# Change environment variables (Optional - the suffix is used in image name, role name, lambda function name, policy name, event bridge name, s3 bucket name & cloud trail name)

export TF_VAR_RANDOM_SUFFIX=31

export TF_VAR_RANDOM_PREFIX=aws-iam-gitops

# Change environment variables - Recommended to leave them as they are, but feel free to change them

export TF_VAR_ECR_REPO_TAG=v${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_AWS_PAGER=

export TF_VAR_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)

export TF_VAR_REGION=us-east-1

export TF_VAR_ROLE_NAME=${TF_VAR_RANDOM_PREFIX}-lambda-role-${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_IMAGE=${TF_VAR_ACCOUNT_ID}.dkr.ecr.${TF_VAR_REGION}.amazonaws.com/${TF_VAR_ECR_REPO_NAME}:${TF_VAR_ECR_REPO_TAG}

export TF_VAR_LAMBDA_FUNCTION_NAME=${TF_VAR_RANDOM_PREFIX}-${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_POLICY_NAME=${TF_VAR_RANDOM_PREFIX}-lambda-permissions-${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_LAMBDA_TIMEOUT=120

export TF_VAR_EVENTBRIDGE_NAME=${TF_VAR_RANDOM_PREFIX}-${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_S3_BUCKET_NAME=${TF_VAR_RANDOM_PREFIX}-${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_CLOUDTRAIL_NAME=${TF_VAR_RANDOM_PREFIX}-${TF_VAR_RANDOM_SUFFIX}

# AWS components

aws ecr create-repository --repository-name ${TF_VAR_ECR_REPO_NAME}

aws ecr get-login-password --region ${TF_VAR_REGION} | docker login --username AWS --password-stdin ${TF_VAR_ACCOUNT_ID}.dkr.ecr.${TF_VAR_REGION}.amazonaws.com

docker build --platform linux/amd64 --build-arg GITHUB_USERNAME=${TF_VAR_GITHUB_USERNAME} --build-arg GITHUB_REPO=${TF_VAR_GITHUB_REPO} --build-arg GITHUB_TOKEN=${TF_VAR_GITHUB_TOKEN} -t ${TF_VAR_IMAGE} .

docker push ${TF_VAR_IMAGE}

terraform init

terraform apply

Building systems - TLDR

GitHub

Make sure to create a Personal Access Token (PAT) with read & write access to the given repository.

Initialize variables

We will use Terraform to build the entire system except for ECR. Focus only on the variables that need to be changed; the other variables can be ignored.

# Change environment variables (MUST/MANDATORY)

# Make sure TF_VAR_GITHUB_REPO exists on your GitHub

export TF_VAR_GITHUB_TOKEN=

export TF_VAR_GITHUB_USERNAME=rewanthtammana

export TF_VAR_GITHUB_REPO=testaws

# Change environment variables (Optional)

export TF_VAR_ECR_REPO_NAME=aws-iam-gitops

# Change environment variables (Optional - the suffix is used in image name, role name, lambda function name, policy name, event bridge name, s3 bucket name & cloud trail name)

export TF_VAR_RANDOM_SUFFIX=31

export TF_VAR_RANDOM_PREFIX=aws-iam-gitops

# Change environment variables - Recommended to leave them as they are, but feel free to change them

export TF_VAR_ECR_REPO_TAG=v${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_AWS_PAGER=

export TF_VAR_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)

export TF_VAR_REGION=us-east-1

export TF_VAR_ROLE_NAME=${TF_VAR_RANDOM_PREFIX}-lambda-role-${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_IMAGE=${TF_VAR_ACCOUNT_ID}.dkr.ecr.${TF_VAR_REGION}.amazonaws.com/${TF_VAR_ECR_REPO_NAME}:${TF_VAR_ECR_REPO_TAG}

export TF_VAR_LAMBDA_FUNCTION_NAME=${TF_VAR_RANDOM_PREFIX}-${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_POLICY_NAME=${TF_VAR_RANDOM_PREFIX}-lambda-permissions-${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_LAMBDA_TIMEOUT=120

export TF_VAR_EVENTBRIDGE_NAME=${TF_VAR_RANDOM_PREFIX}-${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_S3_BUCKET_NAME=${TF_VAR_RANDOM_PREFIX}-${TF_VAR_RANDOM_SUFFIX}

export TF_VAR_CLOUDTRAIL_NAME=${TF_VAR_RANDOM_PREFIX}-${TF_VAR_RANDOM_SUFFIX}
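The naming scheme behind these variables can be sketched in Python as follows. This is only an illustration of how the prefix and suffix combine into resource names; the derive_names function is hypothetical and the account ID is a placeholder.

```python
from typing import Dict


def derive_names(prefix: str, suffix: str, account_id: str,
                 region: str, ecr_repo: str) -> Dict[str, str]:
    """Mirror the shell exports: build every derived name from prefix and suffix."""
    base = f"{prefix}-{suffix}"
    tag = f"v{suffix}"
    return {
        "role_name": f"{prefix}-lambda-role-{suffix}",
        "policy_name": f"{prefix}-lambda-permissions-{suffix}",
        "lambda_function_name": base,
        "eventbridge_name": base,
        "s3_bucket_name": base,
        "cloudtrail_name": base,
        "image": f"{account_id}.dkr.ecr.{region}.amazonaws.com/{ecr_repo}:{tag}",
    }


# 123456789012 is a placeholder account ID.
names = derive_names("aws-iam-gitops", "31", "123456789012",
                     "us-east-1", "aws-iam-gitops")
print(names["image"])
```

Because S3 bucket names are globally unique, changing the suffix is the simplest way to avoid a name collision with someone else's bucket.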

Lambda function

Let's start with writing a Lambda function that will sync the desired IAM resources to GitHub. I will use Python for the time being but feel free to use any supported tech stack.

To summarize the code below:

We define a handler function that will be used by AWS Lambda for execution.

    def handler(event, context):
        #.....

Import the boto3 Python SDK to fetch AWS IAM information.

    # AWS clients
    iam = boto3.client('iam')
    orgs = boto3.client('organizations')

Define placeholders for all required variables. DO NOT CHANGE THEM.

    # GitHub credentials
    github_username = "GITHUB_USERNAME"
    github_token = "GITHUB_TOKEN"
    github_repo_name = "GITHUB_REPO"

Clone the destination repository where you want to push your code. Consuming the APIs directly through PyGithub has several limitations, hence we will use the old way and shell out to git.

    random_string = str(randrange(100, 100000))
    local_repo = f"/tmp/{random_string}"
    repo_url = f"https://{github_username}:{github_token}@github.com/{github_username}/{github_repo_name}.git"
    clone_command = f"git clone --depth 1 {repo_url} {local_repo}"
    os.system(clone_command)

Fetch the IAM information for Roles, Policies & SCPs. By default, the AWS list APIs return at most 100 results per call, hence we have to paginate the resources for a complete list. Once we have them, create a file for each entry in the cloned repository.

    # Create new folders and populate them
    for client, category in [(iam, "roles"), (iam, "policies"), (orgs, "scps")]:
        os.makedirs(category, exist_ok=True)
        items = []
        if category == "roles":
            paginator = client.get_paginator('list_roles')
            for page in paginator.paginate():
                items.extend(page['Roles'])
        elif category == "policies":
            paginator = client.get_paginator('list_policies')
            for page in paginator.paginate(Scope='All'):
                items.extend(page['Policies'])
        else:  # category == "scps"
            paginator = orgs.get_paginator('list_policies')
            for page in paginator.paginate(Filter='SERVICE_CONTROL_POLICY'):
                items.extend(page['Policies'])
            # The list call returns summaries only; fetch the full policy content
            for item in items:
                policy_detail = orgs.describe_policy(PolicyId=item['Id'])
                item.update(policy_detail['Policy'])
        for item in items:
            file_name = f"{item['RoleName']}.json" if category == "roles" else f"{item['PolicyName']}.json" if category == "policies" else f"{item['Id']}.json"
            with open(f"{category}/{file_name}", "w") as f:
                json.dump(prettify_json(item), f, default=str, indent=4)

After all the changes, push the code to GitHub.
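The push step is not shown in the summary above. A minimal sketch of how it could look, assuming the git CLI is available in the container and the repository was cloned as shown earlier, follows. The commit_and_push function, the commit identity, and the injectable run parameter are assumptions made for illustration; the project's actual code may differ.

```python
import subprocess
from typing import Callable, List

# Hypothetical commit identity; a Lambda container has no global git config.
GIT_IDENTITY = ["-c", "user.name=aws-iam-gitops",
                "-c", "user.email=aws-iam-gitops@example.com"]


def commit_and_push(local_repo: str, message: str,
                    run: Callable = subprocess.run) -> bool:
    """Commit every change in local_repo and push it.

    Returns False without committing when the working tree is clean.
    """
    status = run(["git", "status", "--porcelain"],
                 cwd=local_repo, check=True, capture_output=True, text=True)
    if not status.stdout.strip():
        return False  # IAM state already matches the repository

    commands: List[List[str]] = [
        ["git", "add", "--all"],
        ["git", *GIT_IDENTITY, "commit", "-m", message],
        ["git", "push", "origin", "HEAD"],
    ]
    for command in commands:
        # check=True raises CalledProcessError if git exits non-zero
        run(command, cwd=local_repo, check=True)
    return True
```

Passing argument lists to subprocess.run avoids shell quoting problems, and check=True surfaces a failed push as an exception in the Lambda logs, whereas os.system only returns an exit status that is easy to ignore.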

Container image/Dockerfile

To achieve consistency, it's always recommended to run an application from a container image. We will package the above code into a container image & feed it to Lambda for execution.

Import the base image that's supported by the Lambda runtime.

    FROM public.ecr.aws/lambda/python:3.11

Copy the list of required packages from requirements.txt.

    COPY requirements.txt ${LAMBDA_TASK_ROOT}

If you remember, we marked some placeholders in the Python code above. We declare them as build arguments here, and they are replaced accordingly during docker build.

    ARG GITHUB_USERNAME
    ARG GITHUB_REPO
    ARG GITHUB_TOKEN

    # Copy function code
    COPY lambda_function.py ${LAMBDA_TASK_ROOT}
    RUN sed -i "s/GITHUB_USERNAME/$GITHUB_USERNAME/g" ${LAMBDA_TASK_ROOT}/lambda_function.py
    RUN sed -i "s/GITHUB_REPO/$GITHUB_REPO/g" ${LAMBDA_TASK_ROOT}/lambda_function.py
    RUN sed -i "s/GITHUB_TOKEN/$GITHUB_TOKEN/g" ${LAMBDA_TASK_ROOT}/lambda_function.py

Install all the required packages.

    # Install the specified packages
    RUN pip install -r requirements.txt
    # -y answers yum's confirmation prompt, which cannot be answered during a build
    RUN yum -y update && yum -y install git

Reference the handler function as the entry point for the container when it starts.

    # Set the CMD to your handler (could also be done as a parameter override outside of the Dockerfile)
    CMD [ "lambda_function.handler" ]
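The effect of the three sed commands can be illustrated with a few lines of Python. This is only a model of the substitution, not code from the project; the substitute_placeholders function and the sample values (octocat, testaws) are made up for the example.

```python
from typing import Dict


def substitute_placeholders(source: str, values: Dict[str, str]) -> str:
    """Replace every occurrence of each placeholder, like sed's s/X/Y/g."""
    for placeholder, value in values.items():
        source = source.replace(placeholder, value)
    return source


template = (
    'github_username = "GITHUB_USERNAME"\n'
    'github_repo_name = "GITHUB_REPO"\n'
)
rendered = substitute_placeholders(
    template, {"GITHUB_USERNAME": "octocat", "GITHUB_REPO": "testaws"}
)
print(rendered)
```

The substitution is case-sensitive, so the lowercase variable names survive and only the uppercase placeholders are replaced. Note that this approach bakes the token into the image, so anyone who can pull the image can read it; treat the ECR repository as sensitive.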

Push image to Elastic Container Registry (ECR)

We need to create an ECR repository.

    aws ecr create-repository --repository-name ${TF_VAR_ECR_REPO_NAME}

Log in to the ECR registry.

    aws ecr get-login-password --region ${TF_VAR_REGION} | docker login --username AWS --password-stdin ${TF_VAR_ACCOUNT_ID}.dkr.ecr.${TF_VAR_REGION}.amazonaws.com

Build the container image.

    docker build --platform linux/amd64 --build-arg GITHUB_USERNAME=${TF_VAR_GITHUB_USERNAME} --build-arg GITHUB_REPO=${TF_VAR_GITHUB_REPO} --build-arg GITHUB_TOKEN=${TF_VAR_GITHUB_TOKEN} -t ${TF_VAR_IMAGE} .

Push it to the ECR repository.

    docker push ${TF_VAR_IMAGE}

Terraform - IAM, Lambda, EventBridge, CloudTrail, CloudWatch, S3

Terraform provisions the rest of the AWS infrastructure. The configuration consists of the blocks below.

Provider Block

This block specifies that we are using AWS as our cloud provider and sets the AWS region to us-east-1.

provider "aws" {

region = "us-east-1"

}

Variable Blocks

Here, we define various variables that will be used throughout the script. For example, GITHUB_USERNAME will store the GitHub username. Similarly, we define other variables like GITHUB_REPO, GITHUB_TOKEN, ECR_REPO_NAME, etc. These are extracted from the environment variables we initialized before.

variable "GITHUB_USERNAME" {

description = "GitHub username"

type = string

}

variable "GITHUB_REPO" {

description = "GitHub repository name"

type = string

}

variable "GITHUB_TOKEN" {

description = "GitHub Personal Access Token"

type = string

sensitive = true

}

variable "ECR_REPO_NAME" {

description = "ECR Repository Name"

type = string

}

variable "RANDOM_SUFFIX" {

description = "Random Suffix for Resource Names"

type = string

}

variable "ECR_REPO_TAG" {

description = "ECR Repository Tag"

type = string

}

variable "AWS_PAGER" {

description = "AWS Pager Environment Variable"

type = string

default = ""

}

variable "ACCOUNT_ID" {

description = "AWS Account ID"

type = string

}

variable "REGION" {

description = "AWS Region"

type = string

}

variable "ROLE_NAME" {

description = "IAM Role Name"

type = string

}

variable "IMAGE" {

description = "Docker Image URI"

type = string

}

variable "LAMBDA_FUNCTION_NAME" {

description = "Lambda Function Name"

type = string

}

variable "POLICY_NAME" {

description = "IAM Policy Name"

type = string

}

variable "LAMBDA_TIMEOUT" {

description = "Lambda Function Timeout"

type = number

default = 120

}

variable "EVENTBRIDGE_NAME" {

description = "EventBridge name"

type = string

}

variable "S3_BUCKET_NAME" {

description = "S3 Bucket"

type = string

}

variable "CLOUDTRAIL_NAME" {

description = "Cloudtrail name"

type = string

}

Local Values

Local values are convenient names or computations that are used multiple times within a module. Here, we define account_id, which is fetched from data.aws_caller_identity.current.account_id, and mirror the input variables under shorter local names.

locals {

account_id = data.aws_caller_identity.current.account_id

region = var.REGION

ecr_repo_name = var.ECR_REPO_NAME

ecr_repo_tag = var.ECR_REPO_TAG

github_username = var.GITHUB_USERNAME

github_repo = var.GITHUB_REPO

github_token = var.GITHUB_TOKEN

role_name = var.ROLE_NAME

lambda_function_name = var.LAMBDA_FUNCTION_NAME

policy_name = var.POLICY_NAME

lambda_timeout = var.LAMBDA_TIMEOUT

eventbridge_name = var.EVENTBRIDGE_NAME

s3_bucket_name = var.S3_BUCKET_NAME

cloudtrail_name = var.CLOUDTRAIL_NAME

}

Data Blocks

This data block fetches the current AWS account ID, user ID, and ARN, which can be used in other resources.

data "aws_caller_identity" "current" {}

IAM Role for Lambda

This block creates an AWS IAM role that can be used by our Lambda function.

resource "aws_iam_role" "lambda_role" {

name = local.role_name

assume_role_policy = jsonencode({

Version = "2012-10-17",

Statement = [

{

Action = "sts:AssumeRole",

Effect = "Allow",

Principal = {

Service = "lambda.amazonaws.com"

}

}

]

})

}

IAM Policy for Lambda

We define an IAM policy that allows the Lambda function to perform desired operations like creating CloudWatch Logs, listing IAM & organization policies, describing organization policies, etc.

resource "aws_iam_policy" "lambda_policy" {

name = local.policy_name

description = "Policy for Lambda function"

policy = jsonencode({

Version = "2012-10-17",

Statement = [

{

Effect = "Allow",

Action = [

"logs:CreateLogGroup",

"logs:CreateLogStream",

"logs:PutLogEvents"

],

Resource = "arn:aws:logs:${local.region}:${local.account_id}:log-group:/aws/lambda/${local.lambda_function_name}:*"

},

{

Effect = "Allow",

Action = [

"iam:ListPolicies",

"iam:ListRoles",

"organizations:ListPolicies",

"organizations:DescribePolicy"

],

Resource = "*"

},

{

Effect = "Allow",

Action = "kms:Decrypt",

Resource = "arn:aws:kms:${local.region}:${local.account_id}:key/*"

}

]

})

}

Attach Policy to Role

This block attaches the IAM policy to the IAM role that will be used by the Lambda function.

resource "aws_iam_role_policy_attachment" "lambda_policy_attach" {

role = aws_iam_role.lambda_role.name

policy_arn = aws_iam_policy.lambda_policy.arn

}

Lambda Function

This block defines the Lambda function, specifying its name, role, and other configurations.

resource "aws_lambda_function" "lambda_function" {

function_name = local.lambda_function_name

role = aws_iam_role.lambda_role.arn

package_type = "Image"

image_uri = "${local.account_id}.dkr.ecr.${local.region}.amazonaws.com/${local.ecr_repo_name}:${local.ecr_repo_tag}"

architectures = ["x86_64"]

timeout = local.lambda_timeout

}

CloudWatch Log Group

This block creates a CloudWatch Log Group where the Lambda function's logs will be stored. We set a retention period of 7 days but it's customizable.

resource "aws_cloudwatch_log_group" "lambda_log_group" {

name = "/aws/lambda/${aws_lambda_function.lambda_function.function_name}"

retention_in_days = 7

}

S3 Bucket for CloudTrail

This block creates an S3 bucket that will be used by CloudTrail for logging.

resource "aws_s3_bucket" "cloudtrail_bucket" {

bucket = local.s3_bucket_name

force_destroy = true

}

CloudTrail Configuration

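CloudTrail can write to the bucket only if the bucket policy allows it. This block attaches a policy that lets the cloudtrail.amazonaws.com service read the bucket ACL and put log objects. The trail itself is defined in the next section, alongside the EventBridge rule.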

resource "aws_s3_bucket_policy" "cloudtrail_bucket_policy" {

bucket = aws_s3_bucket.cloudtrail_bucket.id

policy = jsonencode({

Version = "2012-10-17",

Statement = [

{

Sid = "AWSCloudTrailAclCheck"

Effect = "Allow"

Principal = { Service = "cloudtrail.amazonaws.com" }

Action = "s3:GetBucketAcl"

Resource = aws_s3_bucket.cloudtrail_bucket.arn

},

{

Sid = "AWSCloudTrailWrite"

Effect = "Allow"

Principal = { Service = "cloudtrail.amazonaws.com" }

Action = "s3:PutObject"

Resource = "${aws_s3_bucket.cloudtrail_bucket.arn}/*"

Condition = {

StringEquals = { "s3:x-amz-acl" = "bucket-owner-full-control" }

}

}

]

})

}

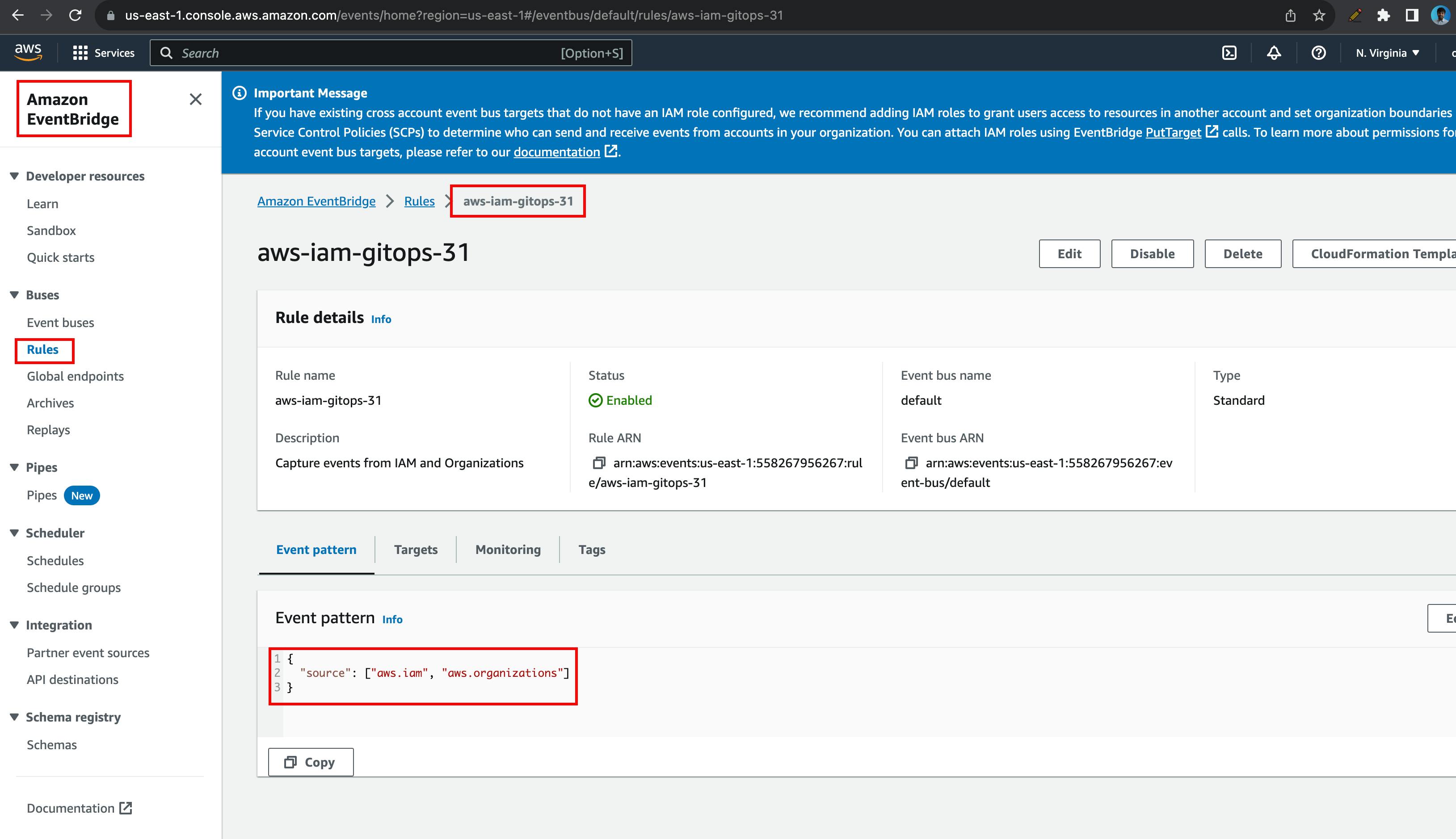

EventBridge Rule

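This section contains two blocks. The first creates the CloudTrail trail that writes to the S3 bucket above. The second creates an EventBridge rule to capture events related to IAM and AWS Organizations. AWS IAM Roles & Policies belong to aws.iam & SCPs belong to aws.organizations, hence we need to match both sources. IAM is a global service and its CloudTrail events are delivered to EventBridge in us-east-1, which is why the rule lives in that region.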

resource "aws_cloudtrail" "cloudtrail" {

name = local.cloudtrail_name

s3_bucket_name = aws_s3_bucket.cloudtrail_bucket.bucket

enable_logging = true

include_global_service_events = true

is_multi_region_trail = true

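enable_log_file_validation = true

# Create the trail only after the bucket policy exists, otherwise CloudTrail rejects the bucket

depends_on = [aws_s3_bucket_policy.cloudtrail_bucket_policy]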

}

# Create EventBridge Rule

resource "aws_cloudwatch_event_rule" "iam_and_orgs_rule" {

name = local.eventbridge_name

description = "Capture events from IAM and Organizations"

event_pattern = jsonencode({

"source" : ["aws.iam", "aws.organizations"]

})

}
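The rule above matches any event whose source is aws.iam or aws.organizations. The Python sketch below shows how such a pattern selects events. The matches function is a deliberately simplified illustration that handles only exact-value lists on top-level keys; it is not EventBridge's matching implementation. NARROWED_PATTERN is an optional tightening that is assumed here and is not part of the original rule.

```python
import json
from typing import Any, Dict, List

# Same pattern as the Terraform rule above.
EVENT_PATTERN: Dict[str, List[str]] = {"source": ["aws.iam", "aws.organizations"]}

# Assumed optional variant: only react to API calls recorded by CloudTrail.
NARROWED_PATTERN: Dict[str, List[str]] = {
    **EVENT_PATTERN,
    "detail-type": ["AWS API Call via CloudTrail"],
}


def matches(pattern: Dict[str, List[str]], event: Dict[str, Any]) -> bool:
    """Simplified matcher: every pattern key must hold one of the allowed values."""
    return all(event.get(key) in allowed for key, allowed in pattern.items())


iam_event = {"source": "aws.iam", "detail-type": "AWS API Call via CloudTrail"}
s3_event = {"source": "aws.s3", "detail-type": "AWS API Call via CloudTrail"}

print(json.dumps(EVENT_PATTERN))
print(matches(EVENT_PATTERN, iam_event), matches(EVENT_PATTERN, s3_event))
```

A broad source-only pattern keeps the rule simple; narrowing by detail-type or by specific event names would reduce how often the Lambda function runs.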

Lambda Permission for EventBridge

This block allows EventBridge to invoke the Lambda function whenever the rule is triggered.

resource "aws_lambda_permission" "allow_eventbridge" {

statement_id = "AllowExecutionFromEventBridge"

action = "lambda:InvokeFunction"

function_name = aws_lambda_function.lambda_function.function_name

principal = "events.amazonaws.com"

source_arn = aws_cloudwatch_event_rule.iam_and_orgs_rule.arn

}

EventBridge Target

This block sets the Lambda function as the target for the EventBridge rule, meaning the Lambda function will be invoked when the rule's conditions are met.

resource "aws_cloudwatch_event_target" "event_target" {

rule = aws_cloudwatch_event_rule.iam_and_orgs_rule.name

target_id = "LambdaFunction"

arn = aws_lambda_function.lambda_function.arn

}

Test the setup

There are many moving parts. We need to test them one by one to make sure every configuration is correct.

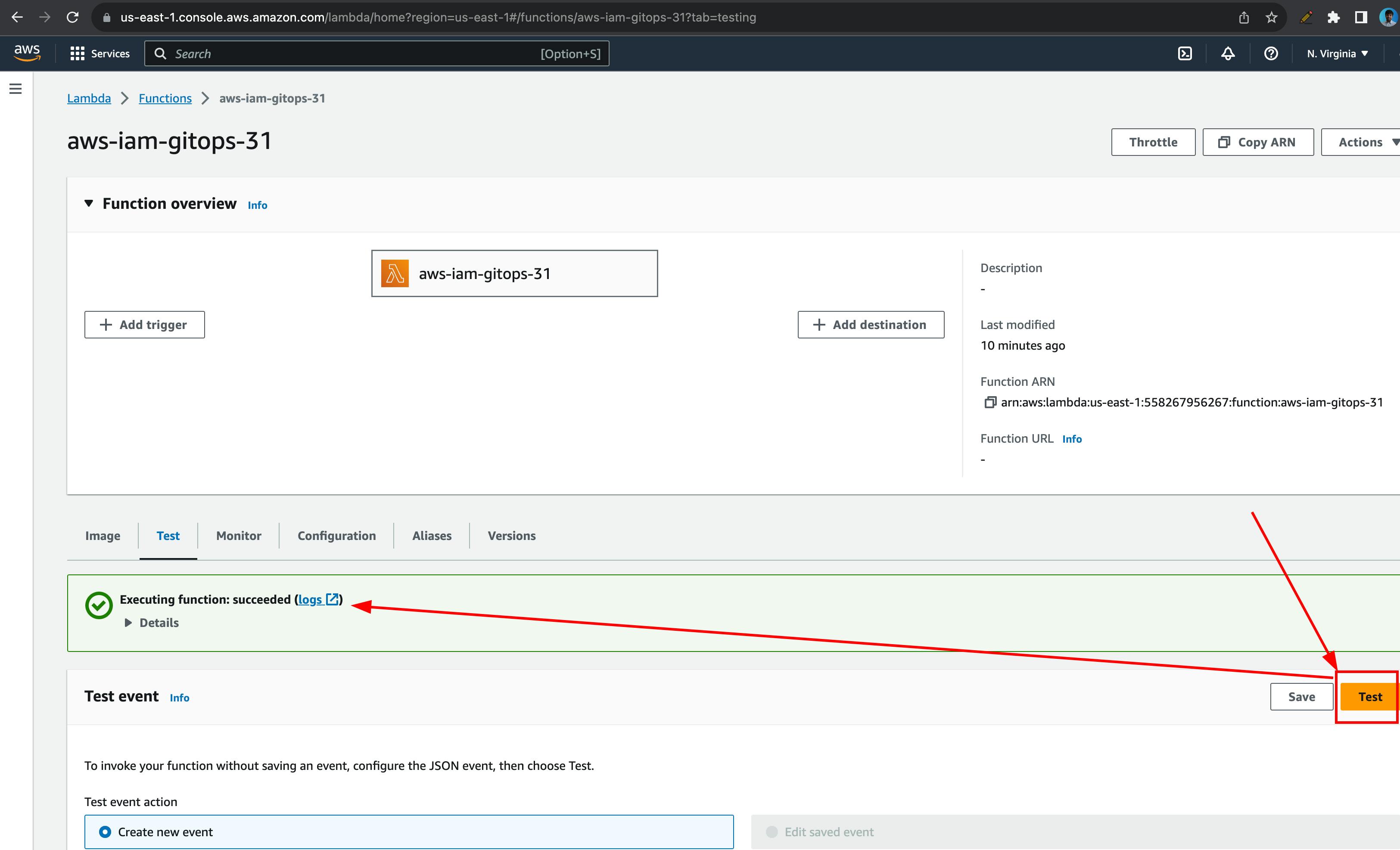

Lambda

Lambda is the core of our operations. To ensure it has required permissions, visit the lambda function & click on "Test". If successful, all good. If not, fix the errors.

Click on the logs above to view the lambda output or for debugging purposes
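The same check can be scripted instead of clicking "Test" in the console. The sketch below assumes a boto3 Lambda client is passed in; the invoke_and_check helper is a hypothetical name, and the empty test event is an assumption about what the handler tolerates.

```python
import json
from typing import Any, Dict


def invoke_and_check(lambda_client: Any, function_name: str,
                     event: Dict[str, Any]) -> str:
    """Invoke the function synchronously and raise if it reported an error."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # wait for the result
        Payload=json.dumps(event).encode("utf-8"),
    )
    body = response["Payload"].read().decode("utf-8")
    # Lambda sets FunctionError when the handler raised or timed out
    if response.get("FunctionError"):
        raise RuntimeError(f"{function_name} failed: {body}")
    return body


# Usage, assuming AWS credentials are configured:
#   import boto3
#   print(invoke_and_check(boto3.client("lambda"), "aws-iam-gitops-31", {}))
```

If the call raises, the returned body usually contains the error type and message, which is the same information shown in the CloudWatch logs.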

IAM

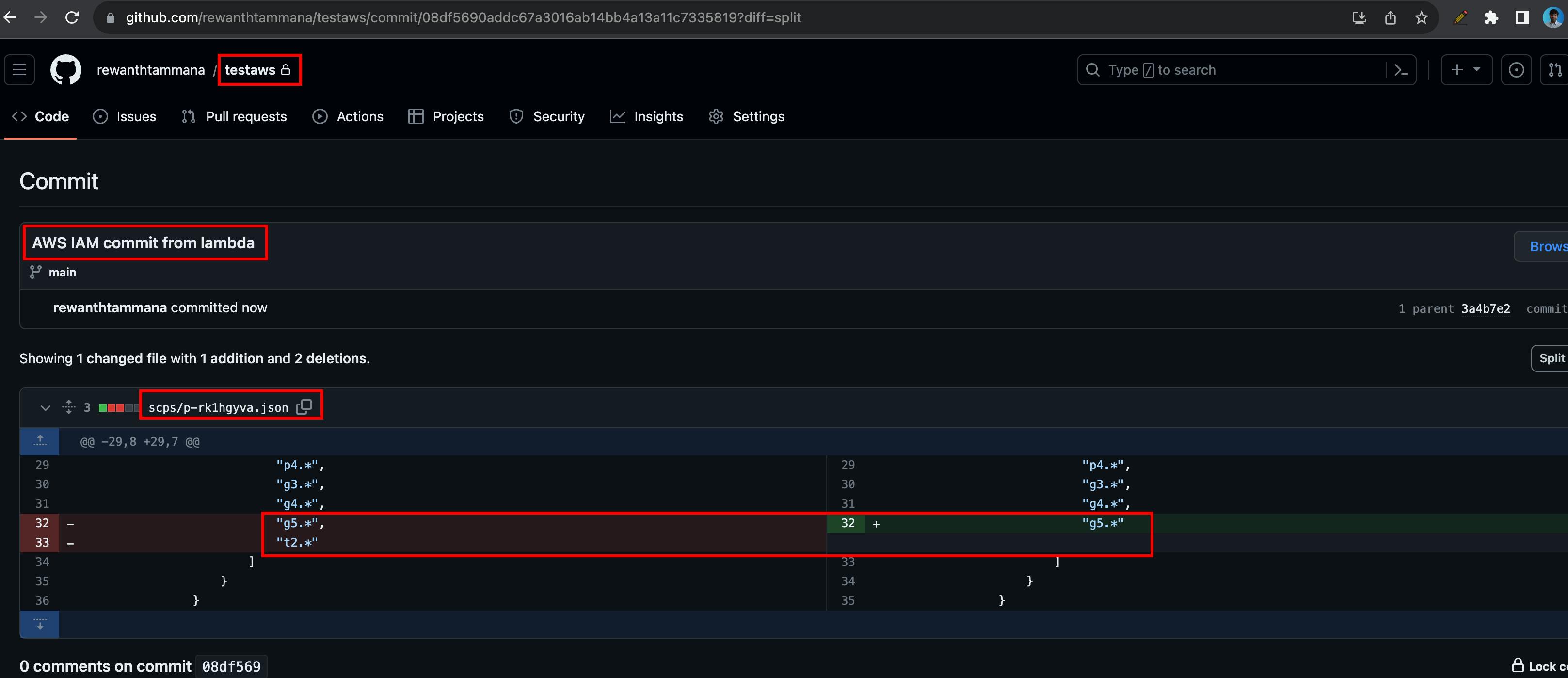

If everything is successful at Lambda, let's try to change an IAM policy & see if our components can detect it & forward the requests to Lambda. I love SCPs, hence I will try to edit an existing rule.

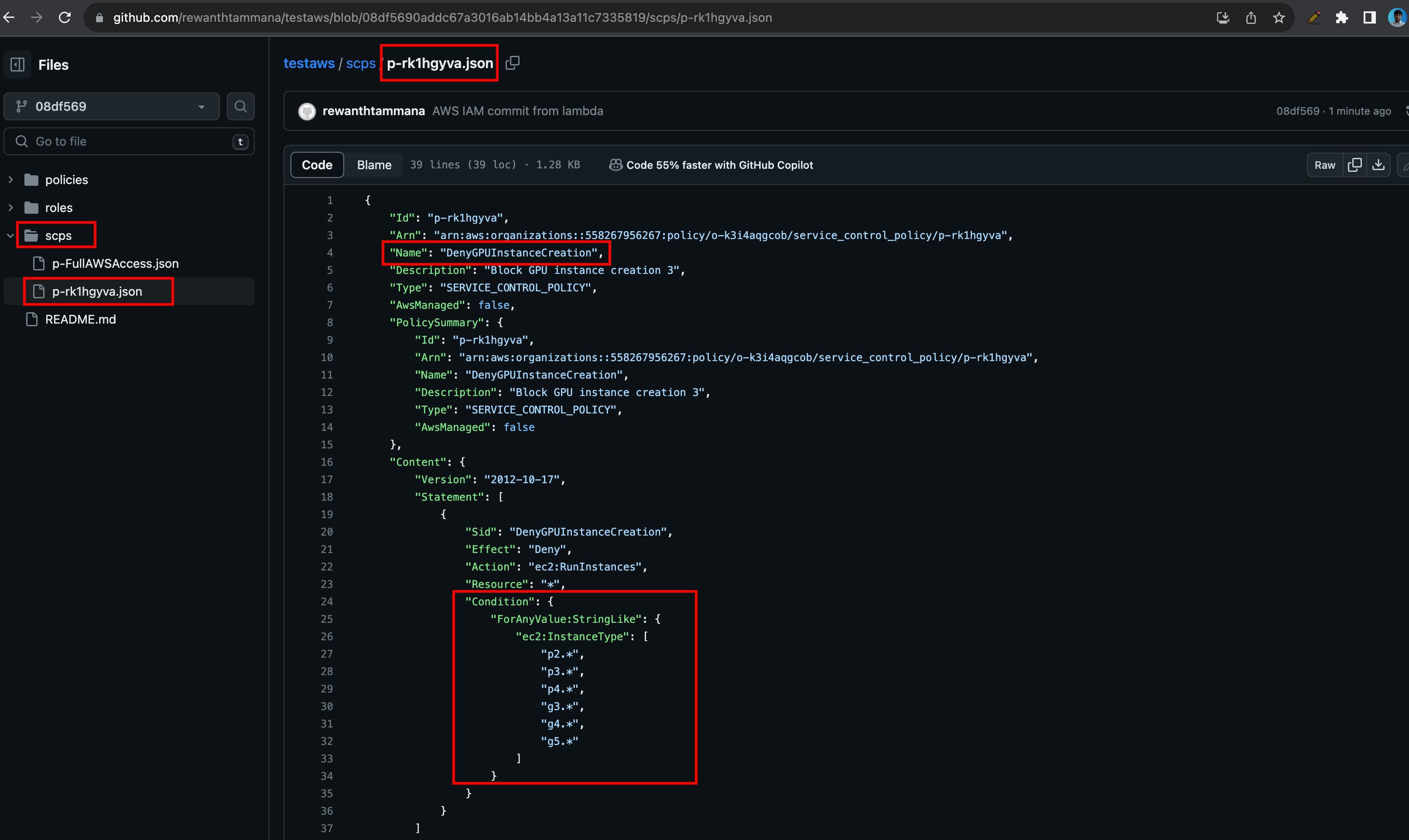

If you visit GitHub, you should see a commit with the updated SCP policy.

You can view the full file & the updated policy information.

If you want to dig deeper, you can look at the CloudWatch logs for the Lambda output, EventBridge, etc.

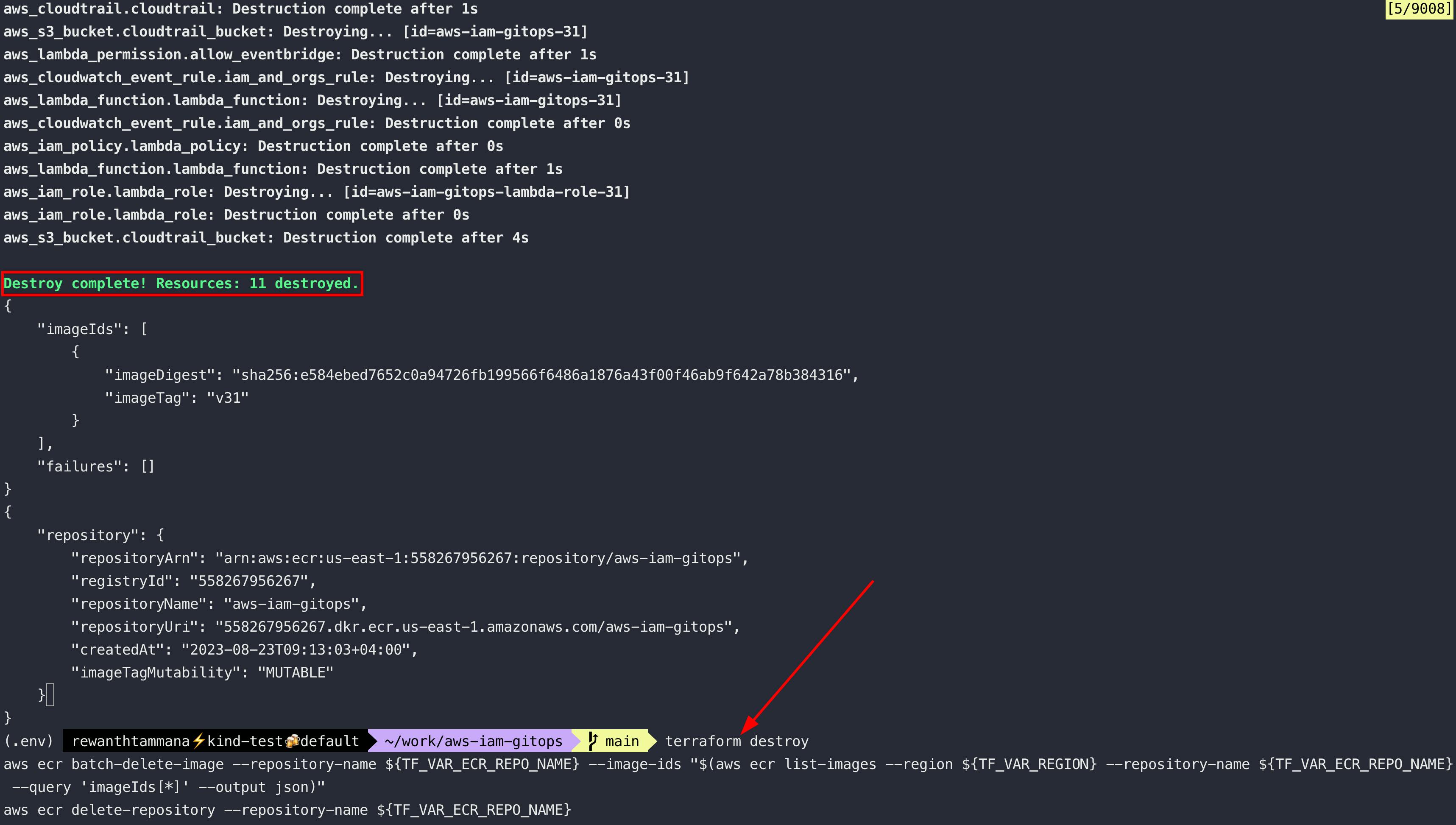

Delete resources

# Delete terraform resources

terraform destroy

# Delete all images in the ECR repository

aws ecr batch-delete-image --repository-name ${TF_VAR_ECR_REPO_NAME} --image-ids "$(aws ecr list-images --region ${TF_VAR_REGION} --repository-name ${TF_VAR_ECR_REPO_NAME} --query 'imageIds[*]' --output json)"

# Delete ECR repository

aws ecr delete-repository --repository-name ${TF_VAR_ECR_REPO_NAME}
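The one-liner above passes every image ID in a single call, while the BatchDeleteImage API accepts at most 100 image IDs per request. For repositories with more images, a minimal Python sketch that deletes in batches could look like this. It assumes a boto3 ECR client is passed in; chunked and delete_all_images are hypothetical helper names.

```python
from typing import Any, Dict, Iterator, List


def chunked(items: List[Any], size: int) -> Iterator[List[Any]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def delete_all_images(ecr_client: Any, repository_name: str,
                      batch_size: int = 100) -> int:
    """Delete every image in the repository; return how many IDs were submitted."""
    image_ids: List[Dict[str, str]] = []
    paginator = ecr_client.get_paginator("list_images")
    for page in paginator.paginate(repositoryName=repository_name):
        image_ids.extend(page["imageIds"])

    for batch in chunked(image_ids, batch_size):
        ecr_client.batch_delete_image(repositoryName=repository_name,
                                      imageIds=batch)
    return len(image_ids)


# Usage, assuming AWS credentials are configured:
#   import boto3
#   delete_all_images(boto3.client("ecr"), "aws-iam-gitops")
```

For this demo the repository holds only a handful of images, so the shell one-liner is sufficient; the batching matters only for larger repositories.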

Further enhancements

So far, we have made sure that any change to AWS IAM is synchronized to GitHub. Once all the data is on GitHub, we can write GitHub Actions that apply the policies in the repository back to AWS IAM, completing the two-way GitOps loop.
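As a starting point for that reverse direction, a GitHub Action would first need to work out what differs between the repository and the account. The sketch below is one possible way to compute such a plan, assuming both sides have been loaded into dictionaries keyed by policy name; plan_sync and the sample data are hypothetical and not part of the project.

```python
from typing import Any, Dict, List


def plan_sync(desired: Dict[str, Any], actual: Dict[str, Any]) -> Dict[str, List[str]]:
    """Compare repository state (desired) with AWS state (actual) by name."""
    shared = desired.keys() & actual.keys()
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(name for name in shared if desired[name] != actual[name]),
        "delete": sorted(actual.keys() - desired.keys()),
    }


desired = {
    "read-only": {"Effect": "Allow", "Action": "s3:Get*"},
    "deny-leave-org": {"Effect": "Deny", "Action": "organizations:LeaveOrganization"},
}
actual = {
    "read-only": {"Effect": "Allow", "Action": "s3:*"},
    "legacy": {"Effect": "Allow", "Action": "ec2:Describe*"},
}

print(plan_sync(desired, actual))
```

Printing the plan in a pull request check before applying it gives reviewers a chance to catch an unintended deletion, which matters a great deal for IAM.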

Conclusion

In conclusion, we've walked you through a hands-on project that employs a range of AWS services, including Lambda, EventBridge, CloudTrail, CloudWatch, ECR, and S3, all orchestrated through Terraform, Docker & AWS CLI. This guide serves as a robust starting point for anyone new to AWS, offering a practical way to gain hands-on experience. As you continue your journey in cloud computing, remember that the principles of GitOps can be applied far beyond IAM, serving as a foundational approach to cloud infrastructure management.