Enhancing the security audit logging of Harbor with OpenResty

Why and how I achieved the audit logging functionality in Harbor Private Container Registry

TL;DR

In this blog post, I look at the gaps I found in the security audit logging of the Harbor private registry, some possible ways to close them to meet security standards and requirements, and finally how I closed them by using OpenResty's scripting abilities.

By the end of the post, you should be able to add security audit logging to a Harbor private registry to help meet the compliance requirements of your container registry environments.

What is Harbor?

Before we dive into the problems and solutions, let’s take a quick look at Harbor and how companies use it.

Harbor is an open-source registry that secures artifacts with policies and role-based access control, ensures images are scanned and free from vulnerabilities, and signs images as trusted. Harbor, a CNCF Graduated project, delivers compliance, performance, and interoperability to help you consistently and securely manage artifacts across cloud-native compute platforms like Kubernetes and Docker. — goharbor.io

Problems we have with Harbor — The Why?

I think Harbor is a great open-source project, and it already solves most of the problems in the work I do. But when it comes to security standards and compliance requirements, it doesn't have a mechanism for audit logging. Most compliance standards require visibility and an audit trail that shows who did what, when, and how. We want the same visibility in Harbor: who accessed the registry, who modified scan settings, who tagged images, and so on.

The current logs don't show who made a change or what was changed. That is a huge setback and a showstopper.

As the container registry is one of the key parts of supply chain security, it becomes even more critical to understand what is happening in it through visibility and proactive monitoring.

Here are some possible solutions I came up with

With a clear problem statement in hand, I started tinkering with existing and possible solutions. Here are some of the options I considered:

- Add custom middleware to harbor-core to implement the required security audit logging

- Customize the Harbor codebase to add new logging modules at the level of individual microservices

- Add customized event controllers to Harbor for logging

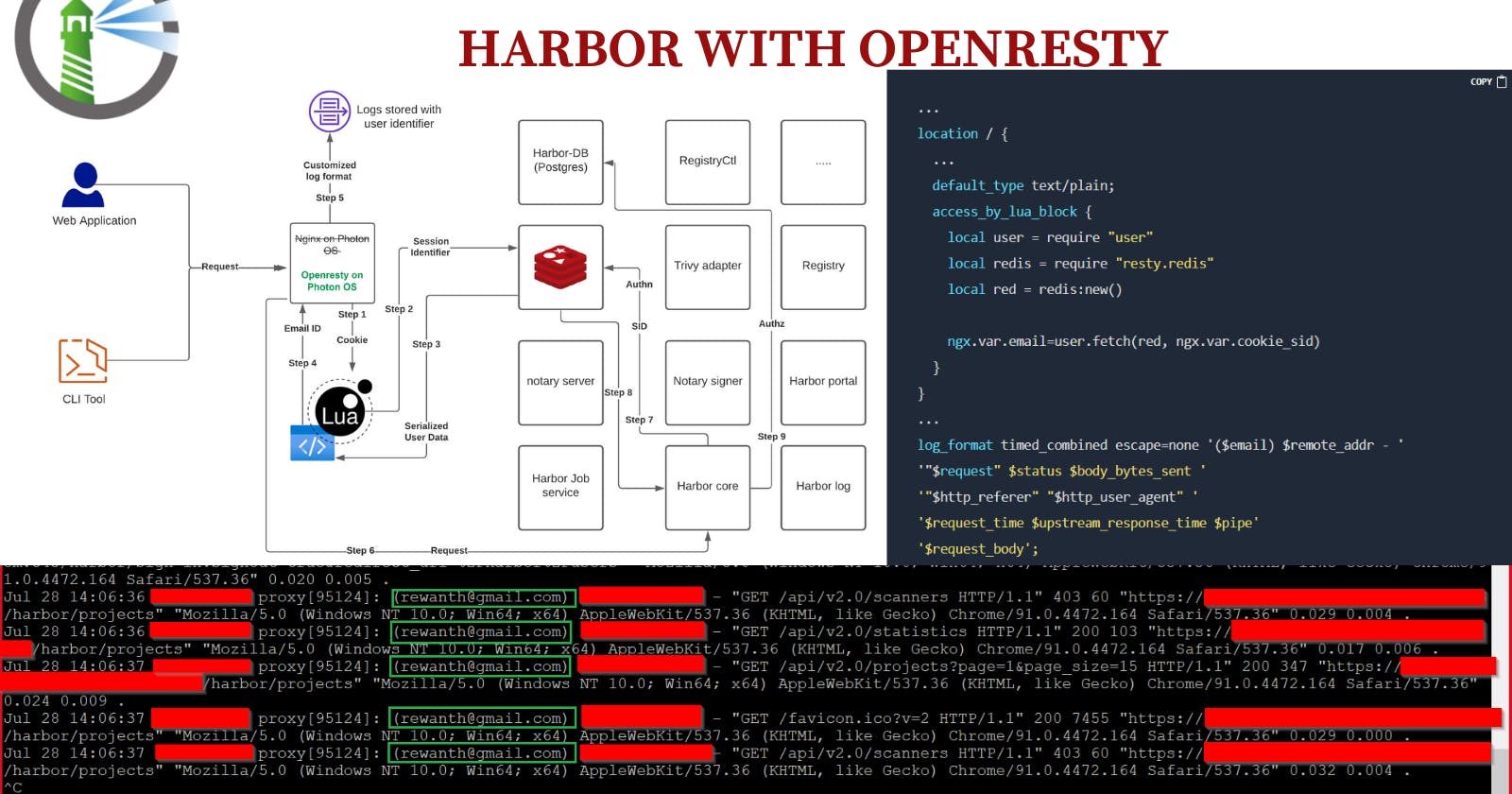

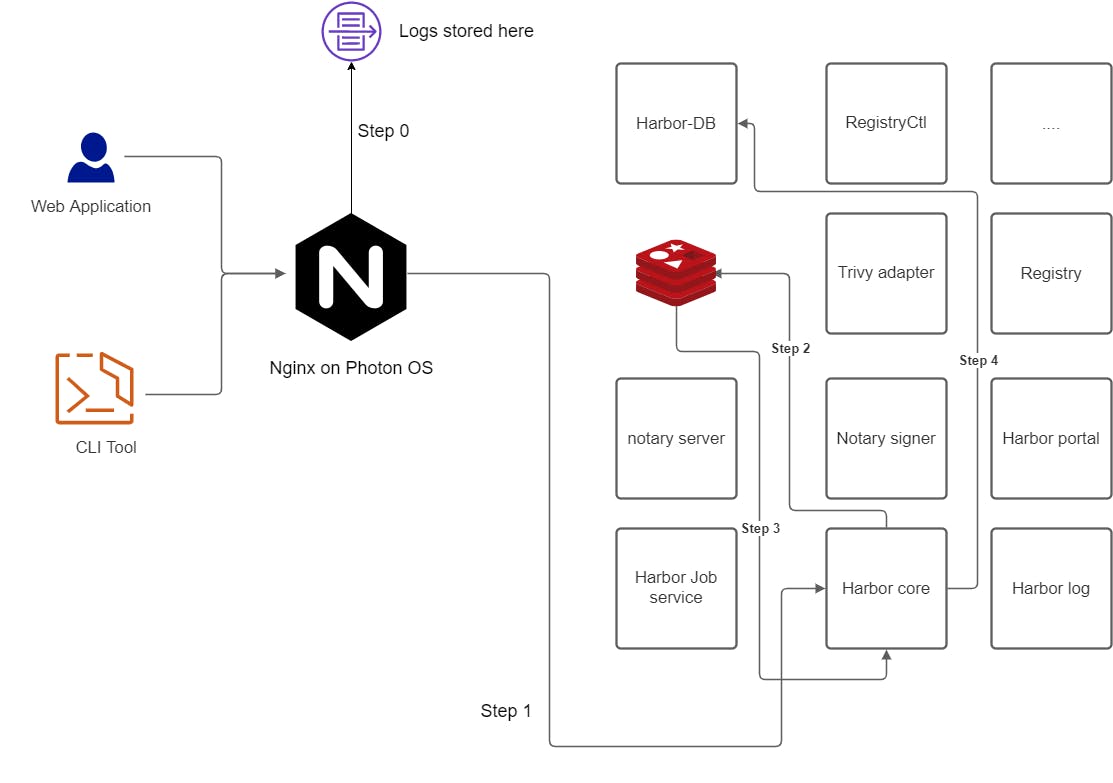

Current workflow

Harbor spins up 11+ microservices on start-up. Nginx acts as a reverse proxy & forwards the requests to respective components. All the logging happens at the Nginx level.

- Step 0: User request comes & audit logging happens at Nginx

- Step 1: Request goes to Harbor core MS from Nginx MS

- Step 2: Harbor core MS communicates with Redis, which holds the serialized session data, for authentication checks

- Step 3: Harbor core MS validates the authentication

- Step 4: Harbor core MS connects with Harbor DB (Postgres) for authorization checks

- Step 5: Harbor core MS validates the authorization

- Step 6: Rest of the magic happens!

Default log format configuration

    log_format timed_combined '$remote_addr - '
        '"$request" $status $body_bytes_sent '
        '"$http_referer" "$http_user_agent" '
        '$request_time $upstream_response_time $pipe';

With the default configuration & workflow, there's no way for Nginx to log the user information just from the HTTP requests. All the Authentication & Authorization checks happen after the request passes the Nginx stage. To solve this problem, we need to fetch user information at the Nginx level.

Solution for the problem I have chosen — The How?

Here comes the exciting part. I looked into pretty much all the possible solutions, but given my constraints (time and smooth upgrades), I chose the following approach.

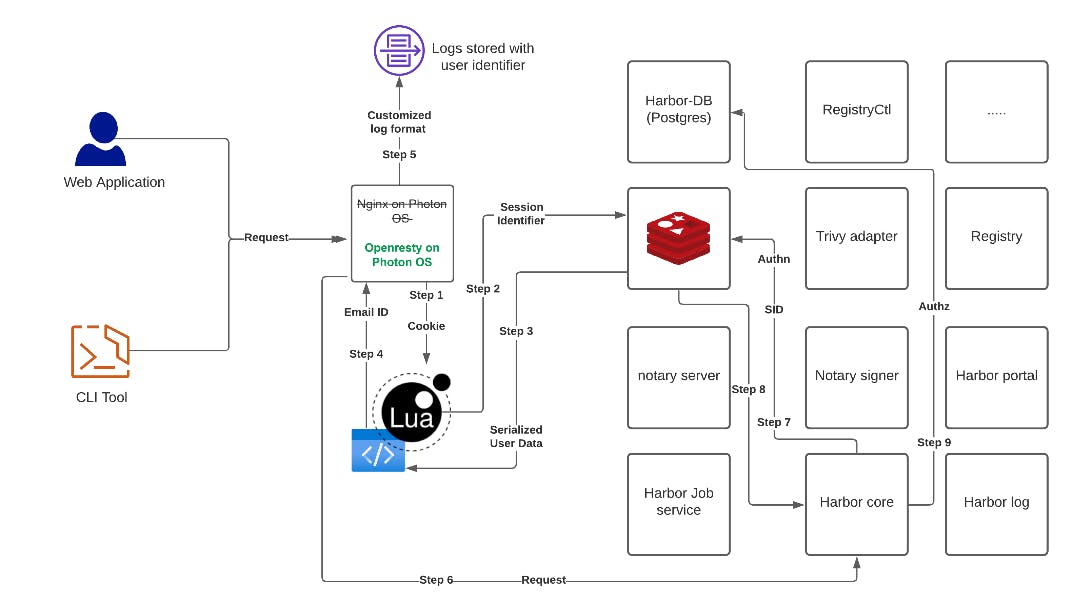

We will replace Nginx with OpenResty, query the Redis key-value store to fetch user information, pass it to the proxy, and save it to the logs.

One consideration to keep an eye on with this solution:

- A slight increase in latency due to the extra calls at the proxy level. The added latency is unlikely to be noticeable until traffic becomes massive.

Solution for the problem — Technicalities

Though Nginx is powerful software, it lacks programming capabilities. We need to add them so that the proxy can communicate with the Redis MS and fetch user information. To do this, we replace Nginx with OpenResty, which adds Lua scripting abilities.

With Lua, we will be able to query Redis cache to retrieve the data, extract information from serialized data, store it in the logs at the reverse proxy level and forward the request ahead for the rest of the operations.
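A minimal sketch of the Redis lookup described above, assuming the lua-resty-redis client that is bundled with OpenResty. The function name `fetch_session`, the host name `redis`, the port, the timeout values, and the idea that the session is stored under the raw `sid` value are all my assumptions for illustration; they are not taken from Harbor or from the repository linked at the end of this post.

```lua
-- Hypothetical helper: look up the serialized session stored under `sid`.
-- `red` is a client created with require("resty.redis"):new().
-- Host, port, and timeouts below are assumptions; adjust to your deployment.
local function fetch_session(red, sid)
    if not sid or sid == "" then
        return nil, "no sid cookie"
    end

    -- Short timeouts (in ms) keep a slow Redis from stalling every request.
    red:set_timeouts(100, 100, 100)

    local ok, err = red:connect("redis", 6379)
    if not ok then
        return nil, "connect failed: " .. tostring(err)
    end

    local data, get_err = red:get(sid)

    -- Return the connection to the pool instead of closing it.
    red:set_keepalive(10000, 50)

    -- A missing key comes back as ngx.null (not a string), so check the type.
    if type(data) ~= "string" then
        return nil, get_err or "session not found"
    end
    return data
end
```

The short timeouts and the keepalive pool are there because of the latency consideration mentioned earlier: every proxied request now pays for one extra Redis round trip, so reusing connections and failing fast keeps that cost small.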

The enhanced workflow sequence:

- Step 1: At the OpenResty level, we extract the cookies from the user request

- Step 2: We extract the `sid` session identifier from the cookies

- Step 3: We fetch the serialized data associated with `sid` from the Redis key-value store

- Step 4: Extract Email ID from the serialized data

- Step 5: Add Email ID to rest of the logging parameters & store it in reverse proxy logs

- Step 6: Forward the initial user request to other MS to do the magic!
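Steps 1 to 4 can be sketched in plain Lua. The helper names, the in-memory table standing in for Redis, the made-up session bytes, and the email pattern are all assumptions for illustration. The real session data is serialized by Harbor, and pulling the first email-looking substring out of it is one possible approach, not necessarily what the linked repository does. Inside OpenResty, the result of step 2 is already available as `ngx.var.cookie_sid`.

```lua
-- Step 2: pull the value of the `sid` cookie out of a raw Cookie header.
local function sid_from_cookies(cookie_header)
    if not cookie_header then return nil end
    for name, value in cookie_header:gmatch("([^=;%s]+)=([^;]*)") do
        if name == "sid" then return value end
    end
    return nil
end

-- Step 4: return the first email-looking substring in the serialized data.
local function email_from_session(blob)
    if type(blob) ~= "string" then return nil end
    return blob:match("[%w%.%-_%+]+@[%w%-]+%.[%w%.%-]+")
end

-- Step 3 stand-in: a table keyed by session id instead of a Redis call.
-- The bytes are invented and do not reflect Harbor's real encoding.
local fake_redis = { abc123 = "\0\5email\0\17alice@example.com\0\4role" }

local sid = sid_from_cookies("_gorilla_csrf=xyz; sid=abc123")
local email = email_from_session(fake_redis[sid]) or "-"
print(sid, email)
```

A pattern match like this is fragile: if the encoding places a byte that happens to be a letter or digit directly before the address, that byte would be captured as part of it. Falling back to `"-"` keeps the log line well-formed when no session or address is found.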

Enhanced logging configuration

Along with the supporting Lua code, the logging configuration has been upgraded considerably:

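    ...
    location / {
        ...
        default_type text/plain;
        access_by_lua_block {
            -- "user" is this project's custom Lua module for the session lookup
            local user = require "user"
            local redis = require "resty.redis"
            local red = redis:new()
            -- $email must already be declared in the Nginx config (for example
            -- with "set") before Lua can assign to it
            ngx.var.email = user.fetch(red, ngx.var.cookie_sid)
        }
    }
    ...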

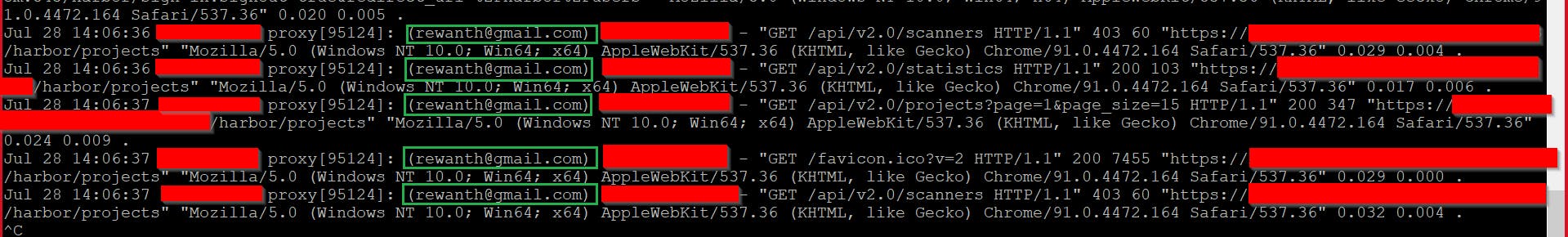

    log_format timed_combined escape=none '($email) $remote_addr - '
        '"$request" $status $body_bytes_sent '
        '"$http_referer" "$http_user_agent" '
        '$request_time $upstream_response_time $pipe '
        '$request_body';

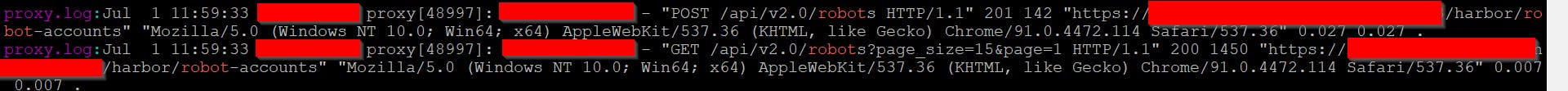

With these configuration changes, the logs now show the request body & email ID of the user.
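To show how the extra field helps answer "who did what", here is a small Lua sketch that pulls the user, client address, request, and status out of one line in the enhanced format. The sample line is written by hand to match the `log_format` above and is not real Harbor output, and the function name `parse_audit_line` is hypothetical.

```lua
-- Parse the leading fields of a line in the enhanced format:
-- ($email) $remote_addr - "$request" $status ...
local function parse_audit_line(line)
    local email, addr, request, status =
        line:match('^%((.-)%) (%S+) %- "(.-)" (%d+)')
    if not email then return nil end
    local method, path = request:match("^(%S+) (%S+)")
    return {
        email = email,
        addr = addr,
        method = method,
        path = path,
        status = tonumber(status),
    }
end

-- Hand-written sample line, not captured from a real deployment.
local sample = '(alice@example.com) 10.0.0.5 - '
    .. '"PUT /api/v2.0/projects/1 HTTP/1.1" 200 0 '
    .. '"-" "curl/8.0" 0.012 0.011 .'
local entry = parse_audit_line(sample)
print(entry.email, entry.method, entry.path, entry.status)
```

Because the format uses `escape=none`, a request line or body that contains quotes is written as-is, so a parser like this should only rely on the leading fields.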

Why I didn’t choose the other solutions

I wanted a solution that solves our problem & also allows smooth upgrades. If we modify the Harbor codebase, then until our changes get merged into the main branch, we will face massive issues every time we upgrade Harbor to the latest version.

Conclusion/Summary

I have tried to solve a problem that has existed since Harbor's inception by replacing the Nginx proxy on Photon OS with OpenResty and adding Lua scripting that calls the Redis cache to fetch the serialized session data for a session ID, extracts the user information from it, saves it in the logs, and then forwards the request to the Harbor core microservice for the regular flow.

NOTE: This solution is definitely not a production-level fix. This blog just demonstrates the things I tried & learnt while fixing the issue.

GitHub - https://github.com/rewanthtammana/harbor-enhanced-logging